Elasticsearch – Parse WildFly Application server Logs

To parse WildFly application server logs in Elasticsearch, do the following:

- Create a new ingest pipeline with a Grok processor. Here is a Grok rule to parse the logs:

%{DATESTAMP:transactionDate},%{INT:LEVEL} %{WORD:Type} %{GREEDYDATA:CodePath}

- Create a new filestream log integration in Elastic Agent, point it to the new pipeline, and enjoy.
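As a rough sketch of the first step, the pipeline can also be created through the ingest API (`PUT _ingest/pipeline/<name>`) instead of the UI. The snippet below only builds the JSON request body; the description text and the `message` source field are assumptions, not something the steps above prescribe.

```python
import json

# Grok rule copied from the post.
WILDFLY_GROK = "%{DATESTAMP:transactionDate},%{INT:LEVEL} %{WORD:Type} %{GREEDYDATA:CodePath}"


def build_pipeline(pattern, field="message"):
    """Return a request body for PUT _ingest/pipeline/<name>.

    The source field name ("message") is an assumption; adjust it to
    whatever field your integration stores the raw log line in.
    """
    return {
        "description": "Parse WildFly server logs",
        "processors": [{"grok": {"field": field, "patterns": [pattern]}}],
    }


body = build_pipeline(WILDFLY_GROK)
print(json.dumps(body, indent=2))
```

The printed JSON can be pasted into Kibana Dev Tools or sent with any HTTP client.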

Have fun.

Elasticsearch – how to parse MySQL general log

Elasticsearch provides native integrations, through Beats or Elastic Agent, to collect MySQL error and slow logs. However, if you want detailed auditing via the MySQL general log, you can parse the log as follows:

- Configure a new pipeline with a Grok processor. Here is the Grok pattern to parse MySQL general logs:

%{TIMESTAMP_ISO8601:transactionDate} %{INT:LogId} %{WORD:Type}\t%{WORD:Type1} %{GREEDYDATA:Type3}

NOTE: check the whitespace in the pattern carefully (spaces versus tabs) and test it against your own log lines before deployment.

- Create a new filestream integration in Elastic Agent, point the stream to the path where the MySQL general logs are kept, and set the pipeline to the newly created custom pipeline.
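To get a feel for what the pattern extracts before deploying it, here is a minimal local sketch. The regular expression is only a rough stand-in for the Grok pattern above (the real Grok definitions are broader), and the sample line is made up; real general-log lines are often padded with extra spaces or tabs, which is exactly why the whitespace needs reviewing.

```python
import re

# Rough regex stand-in for the Grok pattern in the post (an approximation,
# not the actual Grok definitions used by Elasticsearch).
GENERAL_LOG = re.compile(
    r"(?P<transactionDate>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d+)?Z?)"
    r" (?P<LogId>\d+) (?P<Type>\w+)\t(?P<Type1>\w+) (?P<Type3>.*)"
)


def parse_line(line):
    """Return the captured fields as a dict, or None if the line does not match."""
    match = GENERAL_LOG.match(line)
    return match.groupdict() if match else None


# Hypothetical sample line, shaped to fit the pattern.
sample = "2023-05-01T10:15:30.123456Z 12 Query\tSELECT * FROM users"
fields = parse_line(sample)
print(fields)
```

A line that returns `None` here is a hint that the spacing in the pattern does not match your log format.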

Have fun.

Elasticsearch stops immediately after enabling network.host settings in elasticsearch.yml file

Case:

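After enabling network.host: 0.0.0.0 or a dedicated IP to allow other nodes to join the Elasticsearch cluster, Elasticsearch stops immediately after starting.

Solution:

Binding to a non-loopback address makes Elasticsearch enforce its bootstrap checks, and one of them requires vm.max_map_count to be at least 262144. Increase the maximum VM map count using the command below (add the same setting to /etc/sysctl.conf to keep it across reboots):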

sudo sysctl -w vm.max_map_count=262144
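A small sketch for checking the current value before and after the change. It reads the standard Linux procfs path; the function names are illustrative only.

```python
REQUIRED_MAP_COUNT = 262144  # value used in the sysctl command above


def map_count_ok(raw_value, required=REQUIRED_MAP_COUNT):
    """Return True when the kernel setting meets the required minimum."""
    return int(raw_value.strip()) >= required


def read_map_count(path="/proc/sys/vm/max_map_count"):
    """Read the current vm.max_map_count from procfs (Linux only)."""
    with open(path) as handle:
        return handle.read()


def main():
    try:
        raw = read_map_count()
    except OSError:
        print("Could not read vm.max_map_count (not a Linux host?)")
        return
    state = "OK" if map_count_ok(raw) else "too low"
    print(f"vm.max_map_count = {raw.strip()} ({state})")


if __name__ == "__main__":
    main()
```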

You Receive “Unable to Launch Browser input/output error” on Ubuntu Xfce Desktop

Case:

You access your Ubuntu machine over VNC using the Xfce4 desktop. Everything works perfectly, but when launching the default web browser you receive the error:

“Unable to Launch Browser input/output error”

Solution:

Install the Chrome browser and set it as the default browser. This happens because the default server installation doesn’t include a web browser:

- Download it using this command:

wget https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

- Execute the downloaded installer:

sudo apt install ./google-chrome-stable_current_amd64.deb

- Launch the browser:

google-chrome

- Run:

xfce4-settings-manager

- Find “Default Applications”.

- Under “Web Browser”, choose “Chrome”.

You Receive Connection Closed when connecting from FortiGate SSL VPN to Windows Server RDP 2012/2016/2019/2022

Scenario:

You configured SSL VPN access through FortiGate (either V6 or 6.5) and configured an RDP bookmark. When connecting to servers you receive the error “Connection closed”, although traffic to the server is allowed by policy and RDP works locally.

Solution:

Configure a Group Policy that sets Encryption Oracle Remediation to Vulnerable; older FortiGate versions don’t support the remediation option.

How to configure the GPO:

Computer Configuration -> Administrative Templates -> System -> Credentials Delegation then set Encryption Oracle Remediation to Vulnerable.
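For a single test server without a GPO, the same policy is backed by the `AllowEncryptionOracle` registry value under the CredSSP policy key. The sketch below only generates the text of a `.reg` file for that value; treat it as an illustration under that assumption and prefer the GPO in a domain. The function and dictionary names are hypothetical.

```python
# Registry key that backs the "Encryption Oracle Remediation" policy.
KEY = (
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion"
    r"\Policies\System\CredSSP\Parameters"
)

# Policy levels and their DWORD values.
LEVELS = {"force_updated_clients": 0, "mitigated": 1, "vulnerable": 2}


def build_reg_file(level):
    """Return .reg file text that sets AllowEncryptionOracle to the given level."""
    value = LEVELS[level]
    return (
        "Windows Registry Editor Version 5.00\r\n\r\n"
        f"[{KEY}]\r\n"
        f'"AllowEncryptionOracle"=dword:{value:08x}\r\n'
    )


print(build_reg_file("vulnerable"))
```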

Radzen published application or Blazor WASM works only from localhost

Today I faced another cool issue. I have a Blazor app built with Radzen; the application works fine in Visual Studio and Blazor Studio.

When I published the application, I placed it on an IIS server and it worked great. The issue is that I can log in only if I open the application from localhost on the IIS server; if I use the FQDN or the IP, login fails, over both HTTP and HTTPS.

It took me a while fiddling in the logs until I found:

Authorization failed. These requirements were not met:

DenyAnonymousAuthorizationRequirement: Requires an authenticated user

So, what is the issue? After some troubleshooting, I found that I had enabled multitenancy in the app. All users were placed in the main tenant for the time being, but the base URL (Hosts) for the tenant was set to http://localhost:5000

After editing the tenant's Hosts field and changing it to my IP address, the application worked perfectly. I had to read this:

https://learn.microsoft.com/en-us/ef/core/miscellaneous/multitenancy
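To illustrate why only localhost worked, here is a minimal sketch of host-based tenant resolution. It is not Radzen's actual implementation; the tenant table, the function name, and the IP address are all hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical tenant table: each tenant lists the hosts it answers to.
TENANTS = {"main": ["http://localhost:5000"]}


def resolve_tenant(request_url, tenants):
    """Return the tenant whose Hosts list contains the request's host name."""
    request_host = urlsplit(request_url).hostname
    for name, hosts in tenants.items():
        for host in hosts:
            if urlsplit(host).hostname == request_host:
                return name
    return None  # no tenant: the user is treated as anonymous


print(resolve_tenant("http://localhost:5000/login", TENANTS))
print(resolve_tenant("http://192.168.1.10/login", TENANTS))

# Adding the server address to the tenant's hosts fixes the lookup.
TENANTS["main"].append("http://192.168.1.10")
print(resolve_tenant("http://192.168.1.10/login", TENANTS))
```

With no matching tenant, every request looks unauthenticated, which matches the DenyAnonymousAuthorizationRequirement message in the log.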

The Mysterious Case of “I Am Not Me” – Part One – Introduction

==================

Disclaimer: I wrote this series of articles across 2018, 2019, and 2020. Each time I would write a part, and whenever I lacked the time, the energy, the experience, or the technical accuracy for a particular part, I either stopped or lost my way. So some parts may come across as disconnected or outdated. If anyone has corrections, please go ahead.

=================

When people talked about hacking/ethical hacking, the notion was always that we hack either the web or wireless, and of course there is the notion of hacking Windows. Everyone knows there are many ways to break into Windows, but my fascination was never with how the break-in happens; it was with how the break-in is hidden. That is why, whenever I investigated a malware problem, I might not know where it was, but I knew where to look.

This made me always look in two places, the network or the hard disk. That is why, for example, the first thing I built in Triggers was the ability to see all network traffic together with its owning process, because malware always wants to talk on the network.

But there was one box I tried to open many times. It took me time and effort just to understand the box itself, and to understand what I would find inside once I opened it: memory.

True, there is a lot we can learn from the network and the disk about the system and whether it is infected/hacked or not. But there is no substitute for looking inside memory and pulling out all the goodies, and searching memory for malware is not as easy as you might imagine.

===============

One of the things that scared me most was a compromised system where the attacker manages to do patching/hiding of the virus inside a process that looks legitimate, such as svchost.exe, to the point that I came to hate that process itself. I did not run into this often, though. Usually the malware dropped itself under a lookalike name, svch0st.exe for example, and placed itself in Temp.

But what could happen if the attacker is advanced and plays it right...

==================

Last year I wrote an article about the fileless attack: https://www.facebook.com/Mahmoud.magdy.soliman/posts/10158696645230645

That made me even more eager to look deeper into memory and see whether a running process is actually executing suspicious code or not, and that is the role of this series.

The series has several parts, which I will try to put together over the coming period as follows:

- Part One: How PE works. We will see how an executable file runs and identifies itself.

- Part Two: a continuation of Part One, with a look at DLLs.

- Part Three: titled “You Are Not My Sooooon”, on the relationship between parent and child processes.

- Part Four: titled “Pardon Me, Strip and Bend Over for Your Injection”, a look at process injection.

- Part Five: titled “On a Budget” (inspired by the CBC show), on how to build an EDR at home.

==========================

If you have been following for a while, we have looked more than once at processes and threads and the differences between them. But how does a process actually get created?! Let's go.

PE files, or portable executables

A PE file is the executable file that can run program instructions directly. There is a very nice, long, and detailed explanation of the format.

But we will skip everything else and go straight to the Export data part, which, if present, lets the EXE/DLL export functions/variables to other EXEs:

typedef struct _IMAGE_EXPORT_DIRECTORY {

ULONG Characteristics;

ULONG TimeDateStamp;

USHORT MajorVersion;

USHORT MinorVersion;

ULONG Name;

ULONG Base;

ULONG NumberOfFunctions;

ULONG NumberOfNames;

PULONG *AddressOfFunctions;

PULONG *AddressOfNames;

PUSHORT *AddressOfNameOrdinals;

} IMAGE_EXPORT_DIRECTORY, *PIMAGE_EXPORT_DIRECTORY;

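Here we will look at three things:

NumberOfFunctions is the number of functions available for export.

NumberOfNames is the number of names under which functions are exported.

AddressOfNames points to the names of the functions available for export.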
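As a small illustration of those fields, the sketch below unpacks the 40-byte export directory from raw bytes. It assumes the on-disk layout, in which the three Address fields are stored as 32-bit RVAs, and it leaves out locating the directory inside a real PE file. The sample bytes are made up.

```python
import struct
from collections import namedtuple

# Field order follows the struct shown above.
ExportDirectory = namedtuple(
    "ExportDirectory",
    "Characteristics TimeDateStamp MajorVersion MinorVersion Name Base "
    "NumberOfFunctions NumberOfNames AddressOfFunctions AddressOfNames "
    "AddressOfNameOrdinals",
)

# Little-endian: two 32-bit, two 16-bit, then seven 32-bit values (40 bytes).
EXPORT_DIR_FORMAT = "<IIHHIIIIIII"


def parse_export_directory(raw):
    """Unpack an IMAGE_EXPORT_DIRECTORY from the start of a byte string."""
    size = struct.calcsize(EXPORT_DIR_FORMAT)
    return ExportDirectory(*struct.unpack(EXPORT_DIR_FORMAT, raw[:size]))


# Made-up directory: 3 exported functions, 2 of them exported by name.
sample = struct.pack(EXPORT_DIR_FORMAT, 0, 0, 0, 0, 0x2000, 1, 3, 2,
                     0x2028, 0x2034, 0x203C)
directory = parse_export_directory(sample)
print(directory.NumberOfFunctions, directory.NumberOfNames)
```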

If you want to continue, you will need to read a somewhat old article, about twenty years old, https://docs.microsoft.com/en-us/archive/msdn-magazine/2002/march/inside-windows-an-in-depth-look-into-the-win32-portable-executable-file-format-part-2 so that you are ready for Part Two.

Happy malware, everyone.

The Mysterious Case of the Website That Hacks You When You Visit It

One of the biggest things I try to explain to anyone, especially people who are not information security specialists, is that merely visiting an unsafe website can be enough to get you compromised. The problem for many companies today is not only fighting spam but also fighting users who visit unsafe sites, the IT guy who runs cracked software, and so on.

The problem is that we place a great deal of reliance and trust in antivirus software, but that is not enough at all. To make the point clear, let's take a look.

=====================

Yesterday I worked on a demo because I needed to build a fileless dropper to demonstrate certain security weaknesses. But wait, what exactly is a fileless dropper?

====================

Many people imagine that malware or a virus spreads when someone clicks on a particular executable file. But there are advanced kinds of attacks that only need you to visit a link or a website, or even open an email sent to you, to get you compromised. This is done through the fileless attack, which relies on malicious JavaScript that runs without your knowledge and wrecks you: merely opening the website causes a program to be downloaded or executed without your knowledge. So how does that work, exactly?

======================

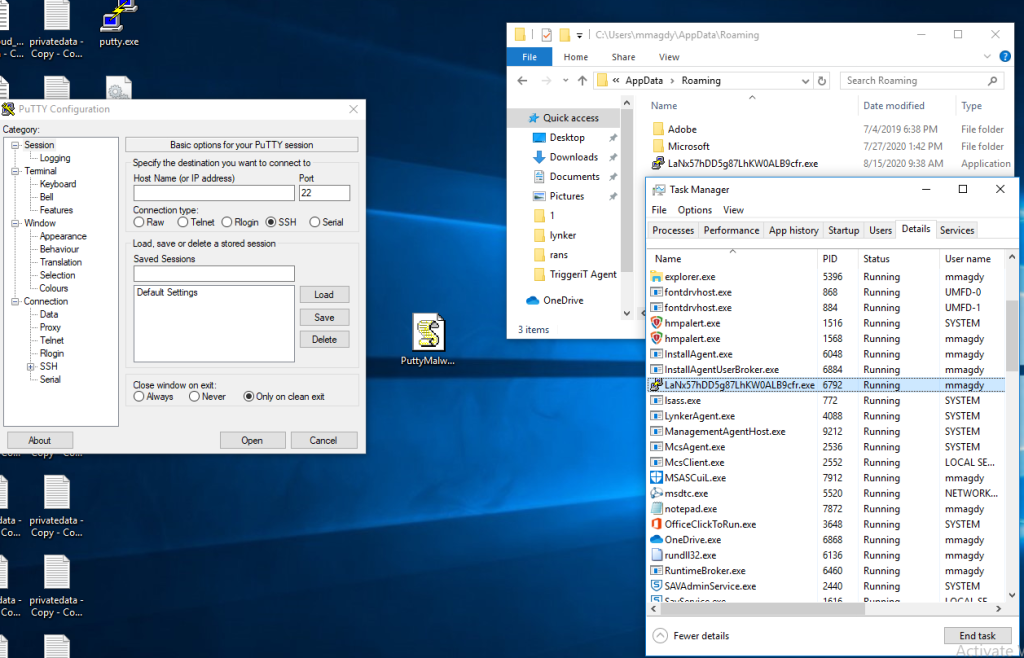

The first image is from a very simple piece of JavaScript that shows how you get compromised. This JavaScript is a demo whose goal is to run a program that prints Hello world on the screen, nothing more and nothing less. But let's see how it actually works:

=====================

The code of this JavaScript is here:

https://github.com/MalavVyas/FilelessJSMalwareDropper

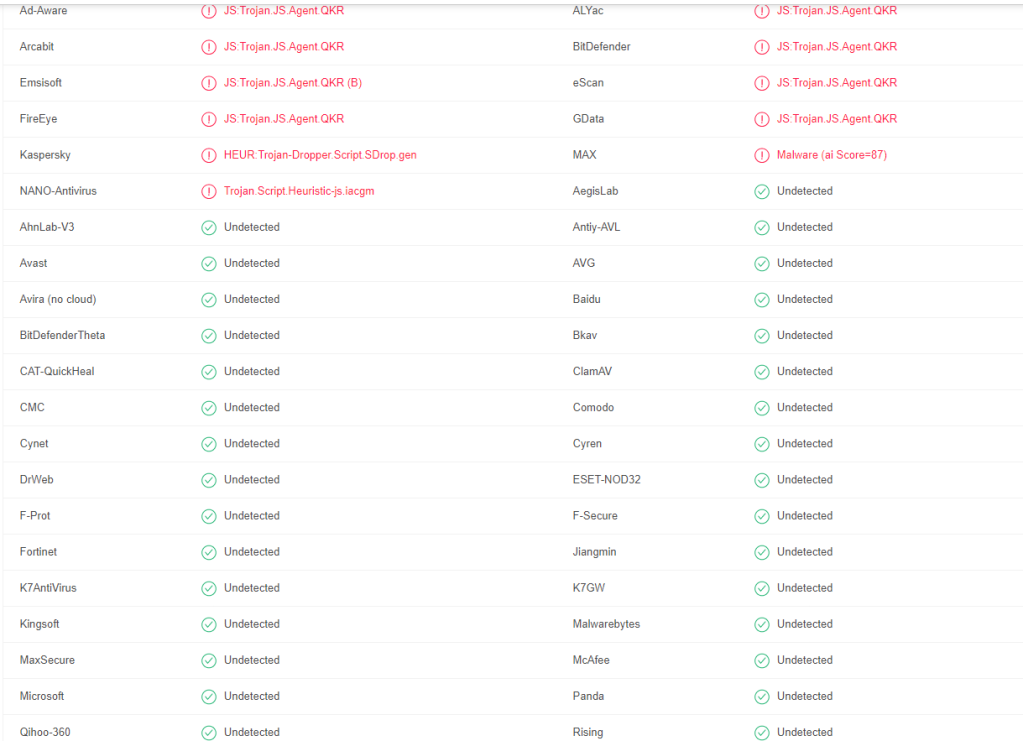

Let's review how it works. Note that in the second image almost no one recognized that the JavaScript contains executable code, apart from a handful of very simple products, and practically no one understood that it is a dropper. Let's look at the code.

==============

Round one: the code looks innocent

If you look at the code, you will not find anything that says it executes anything suspicious or strange. The code just shows a simple alert message, from this line:

alert(new Function(SnccpSrYKfxmqjJk())());

If you review the function SnccpSrYKfxmqjJk, you will find that it is an anonymous function that calls variables defined above it, among them the variable UBkDUhSPnYjP, which is a very long encoded blob. This is where the game begins.

Look at the details of the function:

The function starts with the variable _0x46b2dd, which takes every four characters of the encoded text, adds to and subtracts from the value, and returns the result as a UTF-16 string:

HMPTQXTNBJUV += String['fromCharCode'](_0x36455c);

It accumulates the result in the variable HMPTQXTNBJUV, which is returned at the end and executed through the function SnccpSrYKfxmqjJk.

Interesting.
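To make the idea of round one concrete, here is a minimal sketch of that style of decoding. It assumes each group of four characters is a hexadecimal number and that the arithmetic is a single constant offset; both are assumptions for illustration, and the sample's real arithmetic is not reproduced here.

```python
def decode_chunks(encoded, offset=0):
    """Turn each 4-character hex group into one character.

    Assumptions (not taken from the sample): groups are hexadecimal and the
    arithmetic is a constant offset that is subtracted from each value.
    """
    chars = []
    for start in range(0, len(encoded), 4):
        code = int(encoded[start:start + 4], 16) - offset
        chars.append(chr(code))  # Python analogue of String.fromCharCode
    return "".join(chars)


def encode_chunks(text, offset=0):
    """Inverse helper, used here only to build a test string."""
    return "".join(f"{ord(ch) + offset:04X}" for ch in text)


blob = encode_chunks("alert('hi')", offset=3)
print(blob)
print(decode_chunks(blob, offset=3))
```

Nothing in the encoded blob looks like code until it is decoded, which is why a scanner that only inspects the visible text sees nothing suspicious.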

======================

Round two:

After assembling and decoding the code, a completely different piece of code came out, and a very long one. I will sum up its key moments for you: it is like the code above, a long encoded blob, but encoded with an entirely different function, and it returns anonymous code that gets executed as well.

alert(new Function(SnccpSrYKfxmqjJk())())

ladlloYdTMckcxV = uvqwdfyuwtrzln.indexOf(UBkDUhSPnYjP.charAt(cNskABPCjWZHTMl++));

dycsldgepqeurce = uvqwdfyuwtrzln.indexOf(UBkDUhSPnYjP.charAt(cNskABPCjWZHTMl++));

ejFUWevikvv = uvqwdfyuwtrzln.indexOf(UBkDUhSPnYjP.charAt(cNskABPCjWZHTMl++));

OPOUYPHZPPSPXENMI = uvqwdfyuwtrzln.indexOf(UBkDUhSPnYjP.charAt(cNskABPCjWZHTMl++));

kznyohlxdidjrlrkw = ((ejFUWevikvv & 3) << (2766/vxdeabpuqqh)) | OPOUYPHZPPSPXENMI;

dczhnsicylpqyciye = (ladlloYdTMckcxV << (DkrJItsLVccgD/35)) | (dycsldgepqeurce >> (1148/bkiwKJMAPyUiNYL));

MSMSPYENLYFXRKBBM = ((dycsldgepqeurce & (nlmczdyawfwbb-(1441))) << (1148/bkiwKJMAPyUiNYL)) | (ejFUWevikvv >> (DkrJItsLVccgD/35));;

HMPTQXTNBJUV+=String.fromCharCode(MSMSPYENLYFXRKBBM);

So what now? Hold on.
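Stripped of the obfuscated names, the bit operations in the snippet above have the shape of a Base64-style decoder: four alphabet indexes are combined into three bytes. The sketch below assumes the obfuscated constants evaluate to the usual shifts (2, 4, 6) and mask (15), and that the lookup string is the standard Base64 alphabet; the sample's own alphabet may differ.

```python
import base64

# Standard Base64 alphabet with "=" at index 64 (an assumption about the
# sample's lookup string).
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/="


def decode(text):
    """Decode Base64 text four characters at a time, like the snippet above."""
    out = bytearray()
    for start in range(0, len(text), 4):
        a, b, c, d = (ALPHABET.index(ch) for ch in text[start:start + 4])
        out.append(((a << 2) | (b >> 4)) & 0xFF)
        if c != 64:  # index 64 is the "=" padding character
            out.append((((b & 15) << 4) | (c >> 2)) & 0xFF)
        if d != 64:
            out.append((((c & 3) << 6) | d) & 0xFF)
    return bytes(out)


print(decode("SGVsbG8="))
```

Recognizing a familiar algorithm behind the random names is usually the quickest way through this kind of layer.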

================

Round three

After assembling the code that came out of the second code that came out of the first code, the final code emerged, the one whose real purpose is to compromise you. In our case it does nothing more than a simple Hello World, but let's see how it works.

The code starts with a function that writes the payload:

function WritePayload(name)

{

var v1 = 1

var v2 = 2

var v3 = 2

var codePage='437';

It takes the variable PayloadString, which has two functions attached to it: one is base64 and the other is RC4.

What happened is that the author wrote the C program, encrypted it with RC4 using a certain password, and then output the result as Base64.

The code does the reverse, and out comes a genuine executable file that is written to AppData through the function Dropfile, which looks up your user's AppData path, writes the executable there under a random name, and runs it:

dvar3.WriteAll(rc4(base64(PayloadString),false));

dvar1.Run(dvar2);
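For anyone analysing such a sample, the decoding order described above (Base64 first, then RC4) can be reproduced offline. This is a minimal sketch of plain RC4 plus the two wrapping steps; the key and the stand-in payload are made up, and nothing is written to disk or executed.

```python
import base64


def rc4(key, data):
    """Plain RC4; the same call encrypts and decrypts."""
    state = list(range(256))
    j = 0
    for i in range(256):  # key-scheduling algorithm
        j = (j + state[i] + key[i % len(key)]) % 256
        state[i], state[j] = state[j], state[i]
    i = j = 0
    out = bytearray()
    for byte in data:  # keystream generation and XOR
        i = (i + 1) % 256
        j = (j + state[i]) % 256
        state[i], state[j] = state[j], state[i]
        out.append(byte ^ state[(state[i] + state[j]) % 256])
    return bytes(out)


def pack(key, payload):
    """What the author did: RC4-encrypt, then Base64-encode."""
    return base64.b64encode(rc4(key, payload)).decode("ascii")


def unpack(key, text):
    """What the script does at run time: Base64-decode, then RC4-decrypt."""
    return rc4(key, base64.b64decode(text))


key = b"demo-password"           # hypothetical key
blob = pack(key, b"MZ...stub")   # harmless stand-in for an executable
print(blob)
print(unpack(key, blob))
```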

=====================

Wow, so I do not have to click on an executable file to get burned? No, boss: you only need to open an email containing this bit of JavaScript, and if the attacker has you in his sights, you are done for.

=====================

And what about my own dropper? Because I like to be difficult, and someone might figure out how the encoding is done and decide, say, to block any text that is heavily encoded, I built the dropper out of bytes instead. I converted the executable file into bytes and had the JavaScript write them out from scratch:

var payload = new Array();

payload [01] = '00C7';

payload [02] = '00FC';

payload [03] = '00E9';

payload [04] = '00E2';

payload [05] = '00E4';

payload [06] = '00E0';

payload [07] = '00E5';

Then I convert the bytes into a stream and write the stream out. So what does that mean?

It means I built a JavaScript dropper with PuTTY packed inside it. The moment you run it by mistake, it launches PuTTY for you, and again only a small number of antivirus engines saw it as a virus, as shown in the last image.

====================

So go ahead and download cracks to your heart's content, champ. Happy weekend, everyone.

References:

https://resources.infosecinstitute.com/reverse-engineering-javascript-obfuscated-dropper/

Commvault Hyperscale Installation – notes from the field.

Wow, it has been 4 years since I blogged here. I initially moved to my own website, but I am returning here for the time being to share some goodies with you.

In this blog post, I will share with you some insights about Commvault’s hyperscale appliances.

Commvault Hyperscale is a state-of-the-art storage appliance that allows seamless protection, upgrade, and expansion of backup storage.

Commvault is expanding in Egypt, and I had the pleasure of installing 2 of the 3 appliances currently installed in Egypt.

After fiddling with the appliances, I want to share some insights about them.

Part 1: Fujitsu OEM Hyperscale Appliances

Those are the original HS ones that come from Fujitsu. They come pre-installed with the Hyperscale OS, and all you need to do is cable and configure them.

Cabling the appliances is simple, as you have either 2 or 4 10 Gbps ports and a 1 Gbps port for iLO. Here are the tricks:

- 2 of the 10 Gbps ports are connected to the public network and used for data backups.

- 2 of the 10 Gbps ports are configured for internal cluster communication.

- 2 * 1 Gbps iLO ports are connected to the management network.

Trick #1 is that the production network must be able to communicate with the iLO ports, otherwise the cluster will not start. This is a pain in secure environments where iLO must be in an out-of-band management configuration.

Once you set up the devices, and if you choose to install them with a new Commvault installation (which installs RHEL virtualization and Commvault on a Windows VM), you will need to make sure the production network can reach your internal network, AD, and most of the servers, in order to back them up and join Active Directory.

Part 2: HP DL G10 Hyperscale Appliances

Those are HP DL servers that come with disks, and you must install the HS OS on them.

The first issue: don’t install the OS from memory cards or USB media. Instead, connect to iLO, mount the OS ISO through iLO, and boot from it.

During the installation, the OS enumerates the disks. It detects the USB device as a disk and tries to initialize it, which fails and causes the OS install to fail. Hence you must use the method above.

Once the OS is set up, configure the production and private NICs with the required IPs, and connect the HS to an existing CommCell server, which you must install separately.

Notes from the field:

1- For HS OS (not the appliances), make sure that you update Commvault to the latest SPs and download the Linux x86/x64 packages to the server, then make sure to update the cache.

2- Join the appliances one by one to the CommCell, then update the remote cache of each appliance, then update the appliance OS itself, then create the scale-out storage.

3- Make sure that DNS names map properly to the IPs of each HS appliance, otherwise you will see the error (DNS name doesn’t map the device IP).

4- If you misconfigured the NICs, you can edit the NIC configuration and bonding by editing the files under /etc/sysconfig/network-scripts. Do it with extreme caution.

5- Check network configuration using ethtool and ifcfg utilities.

6- Firewalls are your enemy.
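For note 3, a quick pre-check of name resolution can save a failed join. This is a minimal sketch using the standard resolver; the node names and addresses are placeholders, and the function name is illustrative.

```python
import socket


def dns_matches(hostname, expected_ip, resolver=socket.gethostbyname_ex):
    """Return True when hostname resolves to expected_ip.

    The resolver is injectable so the check can be exercised without DNS.
    """
    try:
        _name, _aliases, addresses = resolver(hostname)
    except OSError:  # covers socket.gaierror for unknown names
        return False
    return expected_ip in addresses


# Placeholder node list; replace with your appliance names and IPs.
NODES = {"hsnode1.example.local": "10.0.0.11", "hsnode2.example.local": "10.0.0.12"}


def report(nodes, resolver=socket.gethostbyname_ex):
    """Map each node name to True/False depending on whether DNS agrees."""
    return {name: dns_matches(name, ip, resolver) for name, ip in nodes.items()}


if __name__ == "__main__":
    for name, ok in report(NODES).items():
        print(f"{name}: {'OK' if ok else 'MISMATCH'}")
```

Run it from the CommCell server and from each node, since each side may use a different DNS server.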

Happy installation.