Pandora's Box, No Magic and No Sorcery: What Is a Virtual Processor, What Is a Physical Processor, and Who Is This Lady Called NUMA

=========

In this article:

- What a socket, physical CPU, virtual CPU, physical core, virtual core, NUMA and so on are

- What on earth a "thread" is

- What the difference is between Hyper-V and VMware when it comes to processing

- How all of this affects Microsoft (SQL Server) licensing

========

Pandora's box, as the myths tell it, is the box that was given to Pandora, with all the evils of the world stored inside it. I find the expression a perfect fit every time the topic of virtual versus physical processors comes up, because every time it is opened people start fighting, even though the topic is simple.

OK, so that we don't get lost, let me start with a few things in plain language so we don't fight for the rest of the article. Also, I am not responsible for any indigestion or upset stomach caused by reading this article.

First, forget completelyyyyy the idea that a physical processor is a virtual processor, or that when you create a VM with a virtual processor you are somehow taking a core from the physical CPU.

Second, nobody in this galaxy says there is any relationship (legitimate or illegitimate) between the physical core, the virtual core, the virtual processor and the physical processor. Forget that entirelyyyyy.

So what then?!
So that we agree, let's start from a blank slate with a few definitions.

Physical socket (or socket): the processor itself, physically on the server. It comes in different types and models with different numbers of cores, and a server can have 2, 4, 8, 16 and so on processors, each with multiple cores.

Core: an independent processing unit inside the processor. There are processors with 2, 4, 8, 12, 16 and 24 cores, with different definitions and types.

Hyperthreading: a technology that lets a core execute more than one thread at the same time. Forget it for now.

NUMA: in (physical) multiprocessor systems, each processor has its own local memory. To speed up memory access there is NUMA, a design that lets a processor also reach the memory attached to the other processors, but local access is faster than access to memory sitting behind another processor on a different bus. In other words, memory is available at full speed on the same bus, and it is also available across the other bus, only more slowly. If an application is NUMA-aware, it should take advantage of this and try to keep its data in its own node's memory to reduce the cross talk. http://en.wikipedia.org/wiki/Non-uniform_memory_access

So why did NUMA come up here at all? …Patience.

=============

If you have made it this far, you are doing great. Now let's play the magic tricks.

What is a virtual processor in a virtual machine anyway?!

For many reasons, including public education and taking the easy way out, a lot of people will tell you that a virtual processor equals a physical processor, and that is completely wrong… why?!!!
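To make the "keep it local" idea concrete, here is a minimal Python sketch under stated assumptions: each socket forms one NUMA node, and a VM can stay NUMA-local only if both its vCPUs and its memory fit inside a single node; if they do not, it becomes a "wide" VM that spans nodes. `NumaNode`, `fits_in_node` and `nodes_spanned` are hypothetical names, the node sizes are made up, and real hypervisors also weigh hyperthreads and other factors.

```python
from dataclasses import dataclass


@dataclass
class NumaNode:
    cores: int      # physical cores local to this node (hypothetical size)
    memory_gb: int  # memory attached to this node (hypothetical size)


def fits_in_node(vcpus: int, memory_gb: int, node: NumaNode) -> bool:
    """True if the VM's vCPUs and memory can both stay inside one node."""
    return vcpus <= node.cores and memory_gb <= node.memory_gb


def nodes_spanned(vcpus: int, node: NumaNode) -> int:
    """Minimum number of nodes needed just for the vCPUs (ceiling division)."""
    return -(-vcpus // node.cores)
```

With an 8-core, 128 GB node, a 4-vCPU/64 GB VM fits, while a 12-vCPU VM needs at least two nodes and therefore pays the cross-node access cost.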
Because the hypervisor (whether Microsoft's or VMware's) uses something called the CPU scheduler. The scheduler's job is to take the work coming from the VMs and schedule it on the physical processors: the VM sends instructions to be executed, and the scheduler picks them up and runs them on a physical processor.

A piece of work moves between three states: Wait, Ready and Run. It is either waiting, ready to run and waiting for the scheduler to give it its turn, or running.

This is where the quantum comes in. The quantum is the unit of time the scheduler uses to schedule its work, and it is 50 milliseconds. Work keeps moving from waiting into running, and it is up to the scheduler to control this and assign a processor to the VM so that the work can be executed.

And here we arrive at the key point: a virtual processor is just a slice of time granted to a VM so that it can execute its instructions.

What does that mean? Suppose we have two VMs on a host with four processors of two cores each (8 cores in total). One VM has four virtual processors and the other has one. In a simplified calculation the time is divided into five parts, so in theory the big VM gets 6.4 cores' worth of processing power (8 × 4/5) and the other VM gets 1.6 (8 × 1/5). This reinforces the idea that a virtual processor's share is not tied to one physical processor and can, on paper, exceed 100% of a processor's power (the model is very flat, of course, just for illustration).

Of course, it gets more complicated as the number of VMs, virtual processors, physical processors and cores grows. What matters is that you understand that a virtual processor is a time allocation on the physical processor.

What if I want to guarantee a certain amount of power, or a certain number of MHz, to a VM? That is entirely possible, because you can set reservations and guarantees on the VM…

The model above is nice and neat as long as there is no contention for processing. But when there are more VMs, more time allocations and a shortage of resources, shares, reservations and the CPU scheduler kick in to allocate resources correctly to the VMs. This also means that a VM may try to run an instruction on a processor and find it busy, so the scheduler has to find a free processor to run it. And this is where the questions start: which processor, and which bus? That is where NUMA and the number of cores versus the number of processors come into play.

As we agreed above, there is no relationship at all between the physical processor and physical core on one side and the virtual processor and virtual core on the other, because the virtual processor and core are just a span of time. This means that a VM with two virtual CPUs of two cores each will really perform the same as a VM with one virtual CPU of four cores, because both get the same weight from the scheduler: http://frankdenneman.nl/2013/09/18/vcpu-configuration-performance-impact-between-virtual-sockets-and-virtual-cores/

So where's the catch? The catch comes when you build large VMs that exceed the size of your NUMA node, known as wide VMs, whose configuration is bigger than a single NUMA node. There is a great blog post on this topic: http://blogs.vmware.com/vsphere/2013/10/does-corespersocket-affect-performance.html

So far, so good, and the picture is getting clearer. One thing remains that we won't talk about, so the water doesn't get into the oil: hyperthreading…

The last thing is the thread?! This is a term for the number of VMs we can load onto a core when I don't have a logical calculation of the MHz I can deliver. For example, if I have a processor with 12 cores running VMs with server applications, each core can run between 3 and 6 threads (each thread representing a VM with a single virtual CPU), which gives 36 VMs in total at 3 per core, each with one virtual CPU. Of course, the numbers drop as you start adding virtual CPUs.

Is there a difference between VMware and Hyper-V? Small things, such as what is known as gang scheduling, which Microsoft doesn't use, and small differences in the algorithm for assigning and choosing the core and processor, but the concept stays the same.

Other references:

http://www.virtuallycloud9.com/index.php/2013/08/virtual-processor-scheduling-how-vmware-and-microsoft-hypervisors-work-at-the-cpu-level/

http://www.vmware.com/files/pdf/techpaper/VMware-vSphere-CPU-Sched-Perf.pdf

And what about SQL Server licensing?!
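The arithmetic behind the two examples above, as a flat Python sketch rather than a model of any real scheduler: `naive_shares` reproduces the 6.4 / 1.6 split (8 cores, VMs with 4 and 1 vCPUs), `capped_shares` adds the constraint that each vCPU runs on at most one physical core at a time, and `single_vcpu_vms` is the 12 cores × 3 threads = 36 estimate. All function names are hypothetical; shares, reservations and co-scheduling are deliberately left out.

```python
def naive_shares(vcpus_per_vm: list[int], physical_cores: int) -> list[float]:
    """Flat model: split all core time in proportion to each VM's vCPU count."""
    total = sum(vcpus_per_vm)
    return [physical_cores * v / total for v in vcpus_per_vm]


def capped_shares(vcpus_per_vm: list[int], physical_cores: int) -> list[float]:
    """Same split, but a VM never gets more cores than it has vCPUs,
    because a single vCPU can only run on one core at any instant."""
    shares = naive_shares(vcpus_per_vm, physical_cores)
    return [min(share, v) for share, v in zip(shares, vcpus_per_vm)]


def single_vcpu_vms(cores: int, threads_per_core: int) -> int:
    """Rule-of-thumb consolidation: 1-vCPU VMs per core times cores."""
    return cores * threads_per_core
```

In the uncontended example the cap binds (the VMs top out at 4 and 1 cores, leaving 3 cores idle); once the total vCPU count exceeds the core count, every proportional share is already below its cap and the two functions agree.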
In short: with SQL Server 2008, licensing was per physical processor, which gave you a license for 4 (virtual) cores, whereas starting with SQL Server 2012, and in 2014, licensing is per core.

What does that mean? It means Microsoft always counts the virtual cores on the VM, with a minimum of four core licenses per VM (core licenses are sold in two-core packs). So if you have 4 virtual processors with one core each, it is the same license as one processor with 4 cores.

References:

http://download.microsoft.com/download/3/D/4/3D42BDC2-6725-4B29-B75A-A5B04179958B/MicrosoftServerVirtualization_LicenseMobility_VLBrief.pdf
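A minimal Python sketch of the per-VM core count, assuming the SQL Server 2012/2014 per-core virtual licensing rules of licensing every virtual core, a minimum of four core licenses per VM, and two-core packs; `sql_core_licenses` is a hypothetical helper, and the parameters are there so you can adjust them to whatever your current Microsoft licensing terms say.

```python
import math


def sql_core_licenses(virtual_sockets: int, cores_per_socket: int,
                      minimum_per_vm: int = 4, pack_size: int = 2) -> int:
    """Core licenses to buy for one VM under the assumed per-core rules."""
    virtual_cores = virtual_sockets * cores_per_socket  # sockets vs cores does not matter
    needed = max(virtual_cores, minimum_per_vm)         # per-VM minimum
    return math.ceil(needed / pack_size) * pack_size    # round up to whole packs
```

Four single-core virtual sockets and one four-core virtual socket both come out at 4 licenses, which is the point made above; a 2-core VM is still billed at the 4-core minimum.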

PowerShell script to set proxy settings and toggle "Automatically detect settings" in Internet Explorer

On an hourly basis I have to toggle the proxy settings on my laptop, because I switch between the corporate proxy and the other networks I use, so I thought about scripting it with a simple PowerShell script. The script either sets the proxy and turns "Automatically detect settings" off, or removes the proxy and turns "Automatically detect settings" on. Here is the script:

$regKey = "HKCU:\Software\Microsoft\Windows\CurrentVersion\Internet Settings"

$proxyServerToDefine = "IP:8080"

Write-Host "Retrieving the proxy server..."

$proxyServer = (Get-ItemProperty -Path $regKey -Name ProxyServer -ErrorAction SilentlyContinue).ProxyServer

Write-Host $proxyServer

$val = (Get-ItemProperty -Path "$regKey\Connections" -Name DefaultConnectionSettings).DefaultConnectionSettings

if ([string]::IsNullOrEmpty($proxyServer))

{

Write-Host "Proxy is currently disabled"

$val[8] = $val[8] -band 0xF7

Set-ItemProperty -Path "$regKey\Connections" -Name DefaultConnectionSettings -Value $val

Set-ItemProperty -Path $regKey -Name ProxyEnable -Value 1

Set-ItemProperty -Path $regKey -Name ProxyServer -Value $proxyServerToDefine

Write-Host "Proxy is now enabled"

}

else

{

$val[8] = $val[8] -bor 8

Set-ItemProperty -Path "$regKey\Connections" -Name DefaultConnectionSettings -Value $val

Set-ItemProperty -Path $regKey -Name ProxyEnable -Value 0

Remove-ItemProperty -Path $regKey -Name ProxyServer

Write-Host "Proxy is now disabled"

}
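The script works on byte 8 of the `DefaultConnectionSettings` blob with bitwise operators. Assuming the commonly documented layout, that byte is a flag field mirroring WinINet's `PROXY_TYPE_*` values (0x01 direct, 0x02 manual proxy, 0x04 auto-config URL, 0x08 auto-detect), so "Automatically detect settings" is bit 0x08. This Python sketch only manipulates a copy of the bytes to show the difference between forcing the bit and flipping it; it does not touch the registry, and the helper names are hypothetical.

```python
AUTO_DETECT = 0x08  # assumed "Automatically detect settings" bit
FLAGS_OFFSET = 8    # assumed index of the flags byte in DefaultConnectionSettings


def set_auto_detect(blob: bytes, enabled: bool) -> bytes:
    """Return a copy with the auto-detect bit forced on or off (like -bor / -band)."""
    data = bytearray(blob)
    if enabled:
        data[FLAGS_OFFSET] |= AUTO_DETECT
    else:
        data[FLAGS_OFFSET] &= ~AUTO_DETECT & 0xFF
    return bytes(data)


def toggle_auto_detect(blob: bytes) -> bytes:
    """Return a copy with the auto-detect bit flipped (like -bxor 8)."""
    data = bytearray(blob)
    data[FLAGS_OFFSET] ^= AUTO_DETECT
    return bytes(data)
```

Forcing the bit keeps it in step with the proxy state even if someone changed the checkbox by hand; a plain XOR would silently invert it in that case.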

Unified Boxes, The Sum of all fears

Correction: By mistake I included SQL in the supportability statement; I was speaking about the stack as a whole, including backup. Sorry for that.

Hi there. Earlier this week, fellow MVP Michel de Rooij published a blog post http://eightwone.com/2014/07/02/exchange-and-nfs-a-rollup/ about NFS/Exchange support "again", and the post motivated me to dive into the pool and add my experience.

There was some hesitation in the MVP community about whether we should blog or speak about it. Michel was brave enough to jump in and speak about the topic, and after exchanging some emails, we (including fellow MVP Dave Stork) agreed that this blog is critical, and we created it.

If you want to read more, check Tony Redmond's article http://windowsitpro.com/blog/raging-debate-around-lack-nfs-support-exchange

So, where does the story begin???!!!

I am currently working for a major data center provider. In my current role we try to innovate and find new technologies that will save us time, effort and money, and my team was investigating the unified-box option.

But before delving into the technical part, let me give you some brief background on where I am coming from. My position as an architect at a service provider is an awkward one: I am a customer, a partner and a service provider. I don't only innovate, design, implement, support or operate; I do all of that, and that makes me keen to investigate how every new innovation will be designed, implemented, supported and operated.

Now, about the unified boxes: I was blown away by their capabilities. The savings in space, time and effort from these boxes are massive, but there is a catch: they use NFS, the source of all evil.

NFS has been used for years with VMware to provide a "cost-effective" shared storage option, and a lot of customers adopted NFS over FC because of the claimed savings in money and complexity, but NFS has its own issues (we will see that later).

I was a fan of the technology and created a suggestion on ideascale.com to bring the issue to the product group's attention. We did our best, but Microsoft came back and informed us that NFS won't be supported. They have their own justifications, and we are not here to discuss them because we can't judge Microsoft, but the bottom line is that NFS is not supported as a storage connectivity protocol for Exchange.

Now, the reason for this post is to highlight two things to the community:

- NFS is not supported by Microsoft for Exchange (any version), and there is no workaround for this.

- Choosing a unified box as a solution has its own ramifications that you must be aware of.

I am not here to say that Nutanix/SimpliVity/VMware VSAN etc. are good or bad; I am highlighting the issues associated with them, and the final decision will be yours, totally yours.

I was fortunate to try all of the above: I got some boxes to play with and tested them to the bone. The testing revealed some issues; they might not be issues for you, but they are from my point of view:

- Supportability: Microsoft doesn't support placing Exchange on NFS. With the recent concerns about the value of Exchange virtualization (see a blog post from fellow MVP Devin Ganger http://www.devinonearth.com/2014/07/virtualization-still-isnt-mature/), using these boxes and this set of technologies might not be the best way to run those specific products. You might want to go with physical servers or other options for Exchange/SQL rather than non-supported configurations, even though vendors might push you toward their boxes and blind you with how great and shiny these solutions are. The bottom line: they are not supported by Microsoft, and they won't be in the near future.

- Some of the above use thin-provisioned disks, meaning that disks are not provisioned ahead of time for Exchange, which is the only supported configuration for Exchange virtual hard disks. Thin-provisioned disks expand dynamically on the fly as storage is consumed, which is another unsupported configuration.

- The above boxes have no FC extensibility, and you are limited to a maximum of 2 × 10 GbE connections (I don't know whether some have 4, but I don't think so), meaning you have no option to do FC backups; all backups have to go through the GbE network. We can spend years discussing which is faster or slower, but in my environment I run TBs, if not PBs, of backups, they were always slow on GbE networks, and all of our backups have to be done over FC.

- This means you will run backup, operations, production and management traffic on a single team on shared networks, maybe 2 teams, or over 1 GbE. This might be fine for you, but for larger environments it is not.

- These limitations also cap the number of network connections. A single team with 2 NICs might be sufficient for your requirements, or 2 teams maybe, but some of my customers have different networking requirements and this will not fit them.

- Some of the above boxes do read/write caching. Some of my customers ran into issues when running Exchange Jetstress and high-IO applications, and the only solution provided by the vendor's support was to restart the servers to flush the cache drives.

- Some vendors run compression/deduplication in software, and this requires a virtual machine of 32 GB or larger to start utilizing deduplication.

- All of the above use NFS, meaning you will lose VAAI. VAAI is very critical because it accelerates storage operations by offloading those tasks directly to the array. You can't use VAAI over NFS with virtual machines that have snapshots or are running, meaning that you rely on the cache or must shut down the virtual machines to use VAAI. VAAI is a very important and critical element, so you must understand the effects of losing it.

- Those boxes don't provide tiering. Tiering is another important feature if you are running your own private cloud, because it lets you provision different storage grades to different workloads, and it matters if you want to move hot data to faster tiers and cold data to slower tiers. Tiering touches the heart and soul of every cloud (private or public), and you must understand how this will affect your business, operations, charging and business model.

- From a support/operations and compliance point of view, you are still running an unsupported configuration in terms of disk provisioning and storage backend; again, it is your call.
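To put the backup-bandwidth concern from the list above into rough numbers, here is a back-of-the-envelope Python sketch. It converts decimal terabytes to bits and divides by an effective throughput; `backup_hours` is a hypothetical helper, the `efficiency` factor is an assumed fraction of line rate, and the calculation ignores that on these boxes the same links also carry production and management traffic.

```python
def backup_hours(data_tb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Hours to move data_tb terabytes over link_gbps of bandwidth.

    efficiency is an assumed fraction of line rate actually achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 1e12 * 8                     # decimal TB -> bits
    effective_bps = link_gbps * 1e9 * efficiency  # usable bits per second
    return bits / effective_bps / 3600
```

At full line rate, 100 TB takes about 11.1 hours over 2 × 10 GbE (20 Gb/s) and twenty times longer over a single 1 GbE link; any realistic efficiency below 1.0 stretches both figures further.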

I am not saying that unified boxes are bad. They are a great solution for VDI, Big Data, branch offices, web servers, application servers and maybe databases that support this sort of configuration, but certainly not for Exchange.

We can spend years and ages discussing whether the above is correct or not, valid or not, and logical or not, but they are certainly concerns that might ring some bells at your end. It is also certain that the above configurations are not supported by Microsoft, and unless Microsoft changes its stance, we can do nothing about it.

We, as MVPs, have done our duty and raised this as a suggestion to Microsoft, but the decision was made not to support it, and it is up to you to decide whether to abide by this or not. We can't force you, but it is our duty to highlight this risk and bring it to your attention. As MVPs and independent experts, we are not attracted to the light like moths; it is our duty to look deeper, beyond the flashlights of the brightest and greatest, and understand and explain the implications and consequences of going this route so you can come up with the best technical architecture for your company.

SYDI script, Exporting/copying data from MS word to Excel sheet using VBA

Wow, it has been a while since I blogged.

I did something interesting this week and I wanted to share it with you.

This week I got a task to check the startup parameters of 400 servers for security. I thought: I won't log into each server and do it manually; I am too lazy for this.

The SYDI script has a nice feature that exports server info along with the startup parameters, so I ran the SYDI commands and exported the data for the 400 servers. But now I had 400 documents, and again, I am too lazy for this; I want a single sheet to read.

So it was time for some VBA scripting. After some searching and copying some scripts, I built this nice script and thought about sharing it.

The script looks in the current document, finds the last table (which should be the startup parameters), takes the first line in the document (which should be the server name), opens the Excel sheet, checks which rows are already written, and copies the Word table below the last one.

Note: I named the macro AutoOpen so that it runs when a document is opened. I built another script to loop through the server names and open the documents, and I was done.

Now I can have a single sheet to read while drinking my coffee.
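A minimal Python sketch of the sheet layout the macro produces, assuming it starts on an empty sheet: one hostname row per document, the table rows beneath it, and one blank row before the next server. It also strips the end-of-cell marker (`Chr(13) & Chr(7)`) that Word appends to every cell's `Range.Text`. `clean_cell` and `consolidate` are hypothetical names, and the data is passed in as plain lists rather than read from Word or Excel.

```python
WORD_CELL_END = "\r\x07"  # Word ends every cell's Range.Text with CR + BEL


def clean_cell(text: str) -> str:
    """Strip Word's end-of-cell marker so Excel receives the bare value."""
    if text.endswith(WORD_CELL_END):
        return text[: -len(WORD_CELL_END)]
    return text


def consolidate(documents: list[tuple[str, list[list[str]]]]) -> list[list[str]]:
    """Stack (hostname, table) pairs: hostname row, table rows, blank gap."""
    rows: list[list[str]] = []
    for hostname, table in documents:
        if rows:
            rows.append([])  # blank separator row between servers
        rows.append([hostname])
        for row in table:
            rows.append([clean_cell(cell) for cell in row])
    return rows
```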

Enjoy

Sub AutoOpen()

Dim wrdTbl As Table

Dim RowCount As Long, ColCount As Long, i As Long, j As Long

Dim hostname As String, cellText As String

Dim tablecount As Long, rowscount As Long, Newline As Long, tableline As Long

'~~> Excel objects

Dim oXLApp As Object, oXLwb As Object, oXLws As Object

'~~> The first line of the document holds the server name

Selection.MoveEnd Unit:=wdLine, Count:=1

Selection.Expand wdLine

hostname = Trim(Replace(Selection.Text, vbCr, ""))

'~~> The last table in the document should be the startup parameters

tablecount = ActiveDocument.Tables.Count

Set wrdTbl = ActiveDocument.Tables(tablecount)

'~~> Get the Word table row and column counts

ColCount = wrdTbl.Columns.Count

RowCount = wrdTbl.Rows.Count

'~~> Create a new Excel application and hide it

Set oXLApp = CreateObject("Excel.Application")

oXLApp.Visible = False

'~~> Open the relevant Excel file

Set oXLwb = oXLApp.Workbooks.Open("pathtoexcelsheet\sample.xlsx")

'~~> Work with Sheet1. Change as applicable

Set oXLws = oXLwb.Sheets(1)

rowscount = oXLws.UsedRange.Rows.Count

If rowscount = 1 Then

'~~> Empty sheet: start at row 1

rowscount = rowscount - 1

Else

'~~> Leave one blank row after the previous server's data

rowscount = rowscount + 1

End If

Newline = rowscount + 1

tableline = Newline + 1

oXLws.Cells(Newline, 1).Value = hostname

'~~> Loop through each row of the table

For i = 1 To RowCount

'~~> Loop through each cell of the row

For j = 1 To ColCount

'~~> Cell text ends with the end-of-cell marker Chr(13) & Chr(7); strip it

cellText = wrdTbl.Cell(i, j).Range.Text

cellText = Left(cellText, Len(cellText) - 2)

Debug.Print cellText

oXLws.Cells(tableline, j).Value = cellText

Next j

tableline = tableline + 1

Next i

'~~> Close and save the Excel file

oXLwb.Close SaveChanges:=True

'~~> Cleanup (VERY IMPORTANT)

Set oXLws = Nothing

Set oXLwb = Nothing

oXLApp.Quit

Set oXLApp = Nothing

'~~> Quit Word once this document has been processed

Application.Quit SaveChanges:=wdDoNotSaveChanges

End Sub

Configuring Azure Multifactor Authentication with Exchange 2013 SP1

Thanks to Raymond Emile from Microsoft COX, who responded to me instantly and pointed me toward OWA + basic auth. Thanks a lot, Ray…

In case you missed it, Azure has a very cool new feature called Azure Multi-Factor Authentication. With MFA in Azure you can perform multifactor authentication for Azure apps and for on-premises apps as well.

In this blog, we will see how to configure Azure Cloud MFA with Exchange 2013 SP1 on-premises. This will be a long blog with multiple steps done at multiple levels, so I suggest you pay very close attention to the details, because it will be tricky to troubleshoot the configuration later.

Here are the high-level steps:

- Configure Azure AD.

- Configure Directory Sync.

- Configure multifactor Authentication Providers.

- Install/Configure MFA Agent on the Exchange server.

- Configure OWA to use basic authentication.

- Sync Users into MFA agent.

- Configure users for the desired login type.

- Enroll users and test the configuration.

So let's rock 'n' roll:

Setting up Azure AD/MFA:

Azure AD/MFA is set up by visiting https://manage.windowsazure.com, where you have 2 options (I list both because I had both, and it took me a while to figure it out):

- If you have never tried Azure, you can sign up for a new account and start the configuration.

- If you have an Office 365 Enterprise subscription, you already have Azure AD configured, so you can sign in to Azure using the same account as in Office 365 and you will find Azure AD configured for you (I had this option, so I had to remove SSO from the previous account and set it up again).

Once you log in to the portal, you can set up Azure AD by clicking Add:

Since I had an Office 365 subscription, it was already configured; if you click on the directory, you can find the list of domains configured in it:

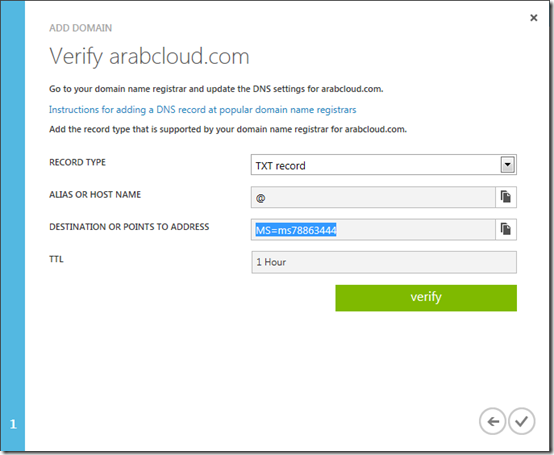

To add a new domain, click Add and enter the desired domain. You will need to verify the domain by adding a TXT or MX record to prove your domain ownership; once done, the domain shows as verified and you can configure it. The following screenshots illustrate the verification process:

Once done, go to Directory Integration and choose to activate directory integration:

Once enabled, download the DirSync tool to a computer joined to the domain:

Once it is installed, run through the configuration wizard, which asks for the Azure account and the domain admin account used to configure AD sync:

Once done, check the Users tab in Azure AD to make sure that users are synced to Azure successfully:

If you select a user, you can choose Manage Multi-Factor Authentication.

You will be prompted to add a multifactor authentication provider. The provider essentially controls the licensing terms for each directory, because you pay either per user or per authentication. Once it is created, click Manage to manage it:

Once you click Manage, you will be taken to the PhoneFactor website to download the MFA agent:

Click Downloads to download the MFA agent. You will install this agent on either:

- A server that acts as the MFA agent and provides RADIUS or Windows authentication for other clients, or

- The Exchange server that will do the authentication (front-end servers).

Since we are using Exchange, install the agent on the Exchange server. Once it is installed, activate the server using the email and password you obtained from the portal:

Once the agent is installed, it is time to configure it.

Note: the auto-configuration wizard won't work, so skip it and proceed with manual configuration.

Another note: FBA with OWA won't work, and neither will auto-detection, so don't waste your time.

Configuring the MFA Agent:

I need to stress how important it is to follow the steps below and edit the configuration exactly as described; otherwise you will spend hours troubleshooting errors with useless error codes and logs. The logging is still poor in my opinion and doesn't provide much information for debugging.

The first step is to make sure you have the correct namespace and SSL certificate in place. Typically you will want users to access the portal using a specific FQDN, and since this FQDN will point to the Exchange server, you will need to publish either:

- Extra directories for the MFA portal, SDK and mobile app, or

- A new DNS record, with that DNS name added to the SSL certificate, and publish it.

In my case, I chose to use a single name for Exchange and the MFA apps: https://mfa.arabcloud.tv. MFA is just a name, so it could be OWA, mail or anything.

The SSL certificate plays a very important role, because the portal and the mobile app talk to the SDK over SSL (you will see that later). So make sure the correct certificate is in place, as well as the DNS records, because the DNS record must be resolvable internally.
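A quick way to reason about "is the right certificate in place": the FQDN users hit must match one of the certificate's DNS names, either exactly or through a wildcard that stands for exactly one leftmost label. This Python sketch assumes you already have the certificate's SAN list; `name_matches` and `certificate_covers` are hypothetical helpers, and in practice Python's `ssl` module performs this check itself when you connect with `server_hostname` set and hostname checking enabled.

```python
def name_matches(hostname: str, pattern: str) -> bool:
    """True if one certificate DNS name (optionally '*.domain') covers hostname."""
    host = hostname.lower().rstrip(".")
    pat = pattern.lower().rstrip(".")
    if pat.startswith("*."):
        suffix = pat[1:]                       # e.g. ".arabcloud.tv"
        label, _, rest = host.partition(".")   # wildcard replaces one label only
        return bool(label) and "." + rest == suffix
    return host == pat


def certificate_covers(hostname: str, san_names: list[str]) -> bool:
    """True if any SAN entry on the certificate covers hostname."""
    return any(name_matches(hostname, name) for name in san_names)
```

For example, `mfa.arabcloud.tv` is covered by `*.arabcloud.tv`, but `x.mfa.arabcloud.tv` is not, and neither is the bare `arabcloud.tv`.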

Once the certificate/DNS issue is sorted, you can proceed with the installation. First, install the user portal; users use this portal to enrol and to configure their MFA settings.

From the agent console, choose to install user portal:

It is very important to choose the virtual directory name carefully. I highly recommend changing the default names because they are very long; in my case I used MFAPORTAL as the virtual directory.

Once installed, go to the user portal settings and enter the URL (carefully, as there is no auto-detection or validation), and make sure to enable the required options in the portal. I highly recommend enabling only phone call and mobile app, unless you are in a US/EU country, in which case you can enable text message auth as well; it didn't work for me because the local provider in Qatar didn't send the reply correctly.

Once done, proceed with the SDK installation. Again, I highly recommend changing the name; I chose MFASDK.

Once it is installed, you are ready for the third step, installing the mobile app portal. To do this, browse to the MFA agent installation directory and run the mobile app installer. Again, choose a short name; I chose MFAMobile.

Once it is installed, you will have to do some manual configuration in the web.config files of the portal and the mobile app.

You will have to specify the SDK authentication account and the SDK service URL; this configuration is mandatory, not optional.

To do so, first create a service account. The best way is to fire up the Active Directory Users and Computers console, find the PFUP_MFAEXCHANGE account and clone it.

Once it is cloned, open c:\inetpub\wwwroot\<MFAportal Directory> and <MFA Mobile App Directory> and edit their web.config files as follows:

For MFA portal:

For MFA mobile App:

Once done, you will need to configure the MFA agent to do authentication for IIS.

Configure MFA to do authentication for IIS:

To make the MFA agent kick in for OWA, you need to configure OWA to use basic authentication. I searched for how to do FBA with MFA, but I didn't find any clues (if you have, let me know).

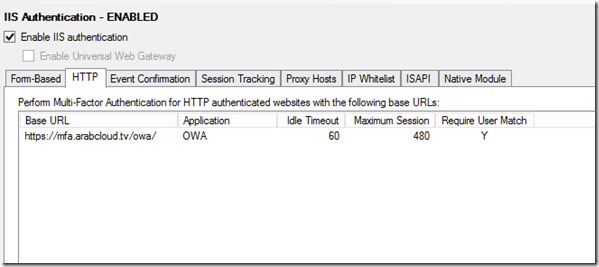

Once you have configured the OWA/ECP virtual directories for basic authentication, go to the MFA agent, then to IIS Authentication, HTTP tab, and add the OWA URL:

Go to the Native Module tab and select the virtual directories where you want the MFA agent to do MFA authentication (make sure to configure it on the front-end virtual directories only):

Once done… you still have one final step: importing and enrolling users…

To import users, go to Users, select Import and import them from the local AD; you can configure the sync to run periodically:

Once imported, you will see your users. You can configure them with the properties and settings required for a specific MFA type; for example, to enable phone call MFA, users need the proper phone number and extension (if necessary):

You can also configure a user to do phone app auth:

Once everything is set, you can finally enrol users.

Users enrol by visiting the user portal URL and signing in with their username/password; once signed in, they are taken to the enrolment process.

For phone call MFA, they will receive a call asking for the initial PIN created during their configuration in MFA. Once it is entered correctly, they will be prompted to enter a new one, and once that is validated, the call ends.

In subsequent logins, they will receive a call asking them to enter their PIN; once it is validated successfully, the login succeeds and they are taken to their mailbox.

For the mobile app, which we will see here, users need to install a mobile app on their phones. Once they log in, they can scan the QR code or enter the URL/code in the app:

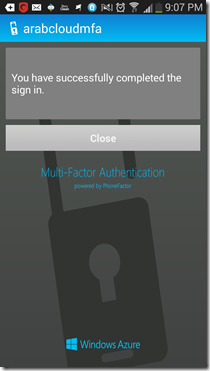

Once validated in the app, you will see a screen similar to this:

The next time you attempt to log in to OWA, the application will ask you to validate the login:

Once authentication is successful, you will see:

and you will be taken to OWA.

Final notes:

Again, this is a first look, and I think there is more to do, such as RADIUS and Windows authentication, which are very interesting. We can also get FBA by publishing OWA via a firewall or a proxy that does RADIUS authentication + FBA, which will work.

I hope that this guide was helpful for you.

5 life lessons I learned while playing chess

I play chess every day; it has been a hobby since my college days. I love the game and the way it requires you to think.

I am not a master; I play and lose a lot, and losing is hard, but after over 14 years of playing chess I have learned a few valuable lessons. In this article, I will share them and show them in action.

Lesson 1: You need to be careful when you are close to winning.

I cannot tell you how many games I have played where people snapped and made uncommonly silly mistakes when they were about to win, me included. The following game is a perfect example of that:

I was winning, and the game was obviously about to end. The logical move was any move other than moving the queen to c8; that was my move, and I lost the queen for no reason other than thinking the game was over.

In life it is the same: when you are close to winning, whether it is an exam, a job, a deal or anything else, make sure you close it. Don't snap in the last mile; keep the same performance and attitude to make sure you WIN and end up WINNING BIG.

Lesson 2: Don't give up, no matter how f**ked you are:

I cannot tell you how many games I have played where, once I take the queen, people give up and resign. Toooo many games.

I found that people give up early and easily, and a lot of them snap (see lesson #1). My 2 cents, in chess and in life: don't give up, no matter how deep in shit you are. Think strategically and move tactically. The queen is not your strongest piece on the chess board; your brain is the strongest piece, on the board and in life. Use it.

Check this game:

I played this game against an extremely experienced player. I was careful and played the King's Gambit, which should guarantee an exceptionally strong dynamic during the game since I was playing white, but guess what: he set me up, I lost the queen, and I couldn't castle. I thought about giving up, but I didn't; I moved correctly and ended up advancing with 2 pieces.

So chess and life are about your choices and moves; decide carefully.

Lesson 3: Easy wins are not always easy

In chess, players target the rook as a highly valuable piece; it is the most valuable after the queen (not counting the king, of course).

In a lot of games you might see an easy target, but you need to be careful about the current situation. Think about what the other player will do, and don't buy into a simple target, because he might hit back hard.

Check this game: http://goo.gl/n1nRGj

I was attacking on the kingside. The other player obviously didn't think carefully about his position and bought the idea of forking the queen and rook; he ended up in a sad situation and position.

In life too, don't buy into easy wins and targets. They are lovely, and sometimes you have to use them, but you have to think ahead: what is behind them, and will they benefit me?!

Lesson 4: No matter how careful you are, sometimes you f*ck up:

I play careful, systematic games against experienced players; whether I play white or black, I use a very systematic approach to avoid any surprises.

But no matter how careful I am, or you are or will, sometimes we do stupid stuff. We will always do stupid and foolish stuff, it is part of life.

check the following game:

again, king gambit, everything is progressing as should be, but in the seventh move I did what, I took the pawn with the bishop, what the hell I was thinking, I don’t know.

I had an experienced player against me, I had lost a valuable piece, and the game was still in its opening. It was a tough situation, but I decided to recover and come back.

As you can see, I pushed with some tactical moves to prevent the king from castling, and strategically set up an attack with the rook and queen.

I ended up with a checkmate. That was an impressive and valuable lesson for me. In life, sometimes you do stupid things, so disastrous that you might think you can't come back from them.

But when you face these situations, it is time to apologize to others or to yourself, make a plan, gather yourself, and recover. You can always do that, no matter how hard your situation is.

Lesson5: Chess is about the bottom line, and so is life:

Chess is about mating the king. It is not about taking the queen or having more pawns. It is about who wins at the end, simple as that.

A lot of people forget this rule. I can't tell you how many times people have run after a hanging pawn or piece and had to pay the price for it.

Check this game: http://goo.gl/xWt4Kb

The guy went after the hanging pawn. I lined up the 2 rooks and the queen, set up the attack, and launched it. He paid the price.

It is the same in life. It doesn't matter how many presentations you have given at work or how many hours you have worked; if you don't have results, it is useless.

You receive the error message "ERROR: MsiGetActiveDatabase() failed. Trying MsiOpenDatabase()." and the installation fails while installing VMware SRM 5.5

If you are installing VMware SRM 5.5, you might get the following error message in the installer:

Can not start VMware Site Recovery Manager Service.

If you dig into the installation logs, you will find the following error message:

ERROR: MsiGetActiveDatabase() failed. Trying MsiOpenDatabase().

To fix this issue, change the logon account of the VMware Site Recovery Manager service from Local System to an account that has the db_owner role on the SRM database.

vCloud Director Automation via Orchestrator, Automating Org, vDC, vApp Provisioning via CSV, Part 4 – Adding Approval and Email Notifications

This is the final blog post in this series. In the previous 3 parts, we explored how to automate most cloud provisioning elements, including organizations, vDCs, vApps and virtual machines. We also covered how to customize their properties, such as adding vNICs and VHDs and setting memory/CPU.

In this final part, we will explore how to add an approval cycle to this provisioning.

In our scenario, we will send the administrator an email notification. It includes the CSV file used to generate the cloud as an attachment, along with a hyperlink to approve or deny the request. Let us see how we can do it.

Import the PowerShell Plugin:

We will use PowerShell to send the email notifications. I tried JavaScript first but had no luck attaching the CSV, so PowerShell comes to the rescue here. You need to import the PowerShell plugin into your Orchestrator through the Orchestrator configuration interface:

Once you import the PowerShell plugin, make sure to restart vCO.

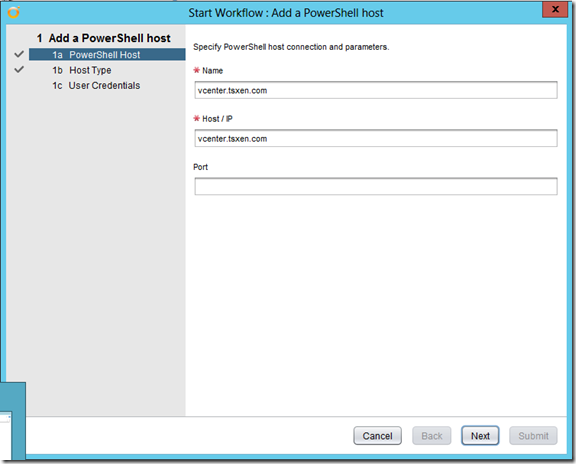

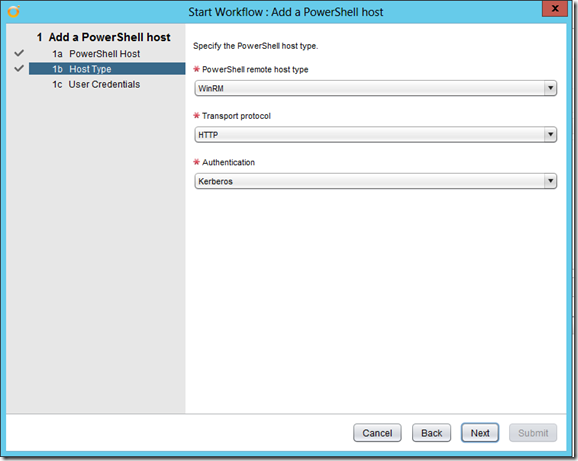

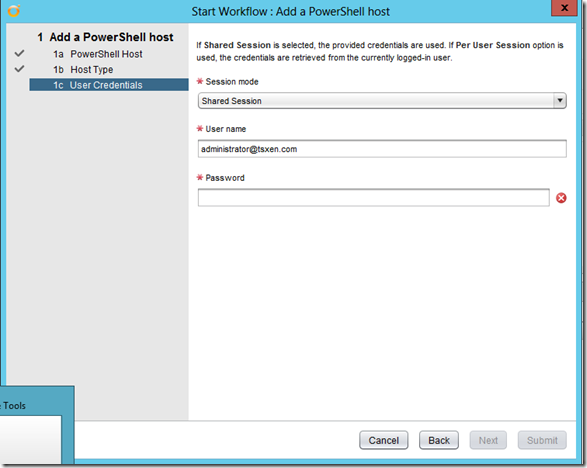

When the restart completes, add a PowerShell host. First make sure that PowerShell remoting is enabled on the server. Once that is done, kick off the add PowerShell host workflow:

If you are adding a Kerberos host, make sure to type the username in UPN (email) format. Otherwise you will get this weird error: Client not found in Kerberos database (6) (Dynamic Script Module name : addPowerShellHost#16)

Once added you are ready to go.

Building the Approval workflow:

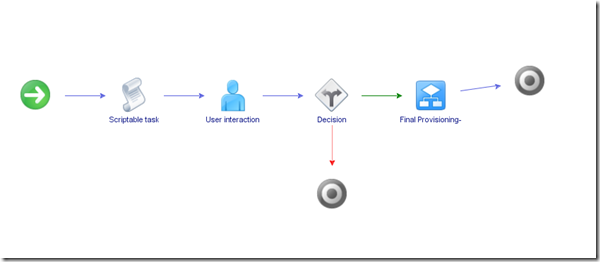

Build a workflow that includes a user interaction and a decision, as follows:

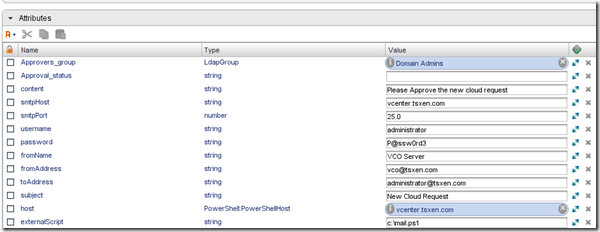

The attributes are defined as follows:

The scriptable task sends the email notification with the attachments, as described above. Let us look at its JavaScript portion:

//var scriptpart0 = "$file =c:\\customer.csv"

// URL that will open a page directly on the user interaction, so that user can enter the corresponding inputs

var urlAnswer = workflow.getAnswerUrl().url ;

var orcdir = "C:\\orchestrator\\" ;

var fileWriter = new FileWriter(orcdir + name + ".html");

var code = "<p>Click Here to <a href=\"" + urlAnswer + "\">Review it</a></p>";

fileWriter.open();

fileWriter.writeLine(code) ;

fileWriter.close();

var output;

var session;

try {

session = host.openSession();

var arg = name+".html";

Server.log (arg);

var script = '& "' + externalScript + '" "' + arg + '"';

output = System.getModule("com.vmware.library.powershell").invokeScript(host,script,session.getSessionId()) ;

} finally {

if (session){

host.closeSession(session.getSessionId());

}

}
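The two strings this task builds are the HTML approval link and the PowerShell command line handed to invokeScript. Those are the parts most likely to break, because of an unescaped quote or a file name with spaces. `FileWriter`, `Server.log` and `workflow.getAnswerUrl()` only exist inside Orchestrator. The sketch below therefore pulls just the string-building into plain JavaScript that you can test outside vCO (for example with Node.js). The helper names `buildApprovalHtml` and `buildPowerShellCommand`, the script path `sendmail.ps1` and the file name `cloud1.html` are hypothetical, and `answerUrl` stands in for `workflow.getAnswerUrl().url`.

```javascript
// Hypothetical helper: builds the one-line HTML that the scriptable task
// writes to <name>.html. answerUrl stands in for workflow.getAnswerUrl().url.
function buildApprovalHtml(answerUrl) {
  // Escape characters that could break out of the href attribute.
  // '&' is replaced first so the other entities are not double-escaped.
  const safeUrl = String(answerUrl)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
  return '<p>Click Here to <a href="' + safeUrl + '">Review it</a></p>';
}

// Hypothetical helper: builds the command line passed to invokeScript,
// i.e. the PowerShell call operator (&), the quoted script path, and the
// HTML file name as the script's single argument.
function buildPowerShellCommand(scriptPath, htmlFileName) {
  // PowerShell single-quoted strings do not expand $variables; an embedded
  // single quote is escaped by doubling it.
  const quote = (s) => "'" + String(s).replace(/'/g, "''") + "'";
  return "& " + quote(scriptPath) + " " + quote(htmlFileName);
}

// Usage with hypothetical values:
console.log(buildPowerShellCommand("C:\\orchestrator\\sendmail.ps1", "cloud1.html"));
// & 'C:\orchestrator\sendmail.ps1' 'cloud1.html'
```

The sketch uses single quotes on purpose, unlike the double quotes in the task above. PowerShell expands `$` inside double-quoted strings, so a path or file name containing `$` would otherwise be altered before the script runs.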

The JavaScript above writes an HTML file containing the link to approve the request. It then runs the PowerShell script on the PowerShell host and passes the HTML file name as an argument. The PowerShell script attaches both the CSV file and the HTML file to the email. Let us see the PowerShell script:

Param ($filename)

$file = "c:\orchestrator\customer.csv"

$htmlfile = "C:\orchestrator\" + $filename

$smtpServer = "vcenter.tsxen.com"

$att = new-object Net.Mail.Attachment($file)

$att1 = new-object Net.Mail.Attachment($htmlfile)

$msg = new-object Net.Mail.MailMessage

$smtp = new-object Net.Mail.SmtpClient($smtpServer)

$msg.From = "emailadmin@test.com"

$msg.To.Add("administrator@tsxen.com")

$msg.Subject = "New Cloud is requested"

$msg.Body = "A new cloud service has been requested. The generation file is attached, and you can approve the request using the link in the attached HTML file."

$msg.Attachments.Add($att)

$msg.Attachments.Add($att1)

$smtp.Send($msg)

$att.Dispose()

$att1.Dispose()

Now you are ready to go. Let us see the outcome:

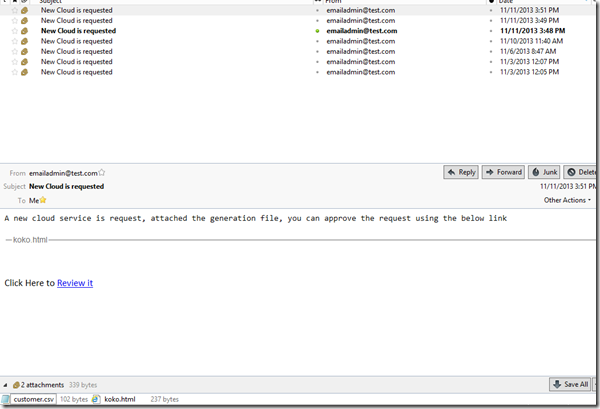

If you run the script successfully, you will receive the following email notification:

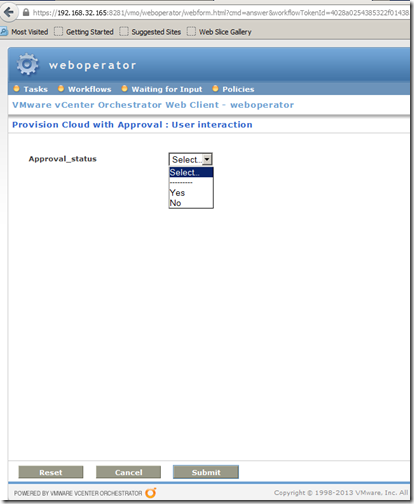

You can see the link to approve the request and the CSV file included in the email. If you click the link, you can see the request and approve or deny it:

What is next:

You might think this is the end, but it is not. This blog series lays the foundation for cloud automation and is just a starting point; cloud automation can go epic. Here are some improvement suggestions for people who want to take it further:

- Add error checking. The script has none, which might cause serious issues in a production environment.

- Add More logging.

- Add automation to network provisioning and vShield operations.

- Automate application provisioning on top of provisioned VMs.

The above is a small list, and we could spend years adding to it. Still, those are the areas I will be working on in the upcoming version of this script.

Till next.

Optimizing WAN Traffic Using Riverbed Steelhead – Part 2 – Optimizing Exchange and MAPI Traffic

In part one (https://autodiscover.wordpress.com/2014/01/02/enhancing-wan-performance-using-riverbed-steelheadpart1-file-share-improvements/) we explored how to optimize SMB/CIFS traffic using Steelhead appliances. In part 2 we will explore how to optimize MAPI connections.

WARNING: Devin Ganger, a fellow Microsoft Exchange MVP, warned me that MAPI traffic optimization works only in very specific scenarios, so test it in your own environment before relying on it. I checked the documentation and tested it in my lab, and it worked, but of course my lab doesn't reflect real-life scenarios.

Joining Steelhead to Active Directory Domain:

In order to optimize MAPI traffic, you must join the Steelheads to the Active Directory domain. If you don't, the Steelheads will see the MAPI traffic but won't be able to optimize it, because it is encrypted. To allow a Steelhead to decrypt the traffic, you need to join it to Active Directory and configure delegation.

As you can see above, the Steelhead compressed the traffic but had no visibility into its contents and couldn't optimize it further. Now let us see what to do.

To join the Steelhead to Active Directory, go to Configuration/Windows Domain and add the Steelhead as an RODC, or as a workstation if you prefer:

(You need to do this on the Steelheads on both sides.)

Once done, you will see the Steelhead appear in AD as an RODC:

Now you need to configure account delegation. Create a normal AD account with a mailbox; I will call this account MAPI. Once it is created, add the SPN to it as follows:

setspn.exe -A mapi/delegate MAPI

Once done, add delegation to the Exchange MDB service in the Delegation tab:

Once added, go to Optimization/Windows Domain Auth and add the account:

Test the delegation and make sure it works fine:

Now go to Optimization/MAPI and enable Outlook Anywhere optimization and MAPI delegated optimization:

Then restart the optimization service, and configure the other Steelhead with the same settings.

Now let us test the configuration and see whether the Steelhead works or not.


While checking the real-time monitoring, the first thing you will notice is that the appliance now detects the traffic as Encrypted MAPI:

I will send a 5 MB attachment to myself from my client, which resides at the remote branch, so the same client both sends and receives it. Let us see the report statistics:

Now you can see some traffic flows. Because the traffic is now decrypted, it has been compressed and reduced in size: the LAN traffic is 3 MB and the WAN traffic is 1.8 MB. When receiving the email, the client received 5 MB, but look at the WAN traffic: only 145 KB. The attachment wasn't sent over the WAN; the client received it from the Steelhead.

Now let us send the same attachment again and see how the numbers move this time.

Look at the numbers: the WAN traffic was around 150 KB (the email headers, etc.), but the attachment didn't travel over the WAN. It clearly traveled over the LAN for both sending and receiving, but it never traversed the WAN, so the WAN traffic was massively reduced. Impressive, huh?
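One common way to summarize results like these is a data-reduction percentage: the share of LAN-side bytes that did not have to cross the WAN, (1 - WAN / LAN) × 100. The sketch below applies that formula to the first test. It reads the 3 MB figure as the LAN-side total and 1.8 MB as the WAN-side total, and it uses the 5 MB received email against 145 KB of WAN traffic. The helper name `dataReductionPercent` is hypothetical, and the 1 MB = 1024 KB convention is an assumption, since the report's units are not stated.

```javascript
// Hypothetical helper: percentage of LAN-side traffic that did not
// have to cross the WAN, i.e. (1 - wan / lan) * 100.
function dataReductionPercent(lanKB, wanKB) {
  if (lanKB <= 0) {
    throw new RangeError("LAN traffic must be positive");
  }
  return (1 - wanKB / lanKB) * 100;
}

// Assumption: 1 MB = 1024 KB.
const MB = 1024;

// Sending: 3 MB on the LAN side, 1.8 MB on the WAN side.
const sendReduction = dataReductionPercent(3 * MB, 1.8 * MB);

// Receiving: a 5 MB email delivered while only 145 KB crossed the WAN.
const receiveReduction = dataReductionPercent(5 * MB, 145);

console.log("send:    " + sendReduction.toFixed(1) + "%"); // send:    40.0%
console.log("receive: " + receiveReduction.toFixed(1) + "%"); // receive: 97.2%
```

Under these assumptions, sending saved about 40% of the bytes. Receiving saved about 97%, which matches the observation that the attachment itself never crossed the WAN.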